In the era of Artificial Intelligence, Large Language Models (LLMs) have redefined how machines understand, reason, and interact with human language. Yet, as organizations scale their AI applications, one challenge persists — how can we make these systems smarter, faster, and more reliable?

The answer lies in multi-stage LLM pipelines — a modular, layered approach to designing intelligent systems that go beyond single-prompt reasoning. At PMDG Technologies, we build next-generation AI solutions using this exact principle — ensuring every stage of processing adds precision, efficiency, and intelligence.

What Is a Multi-Stage LLM Pipeline?

A multi-stage LLM pipeline is a structured workflow that divides a complex AI task into smaller, sequential steps.

Instead of relying on a single model to do everything — from understanding queries to generating answers — each stage focuses on a specific function.

This modular architecture allows AI systems to:

- Validate and refine outputs at every step.

- Reduce computational costs by using smaller models where possible.

- Enhance accuracy and reliability through feedback loops and verification.

- Adapt easily to different tasks and domains.

In short, it’s about making LLMs think in layers — a concept that mirrors how humans solve problems.
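The core idea of "thinking in layers" can be sketched as a list of stage functions, each reading a shared context and handing an extended copy to the next. This is a minimal illustration, not PMDG's framework. The `normalize` and `answer` stages are hypothetical placeholders for real model calls.

```python
from typing import Any, Callable, Dict, List

# A "context" is a plain dict that each stage reads from and extends.
Context = Dict[str, Any]
Stage = Callable[[Context], Context]

def run_pipeline(stages: List[Stage], context: Context) -> Context:
    """Run stages in order, passing each stage's output to the next."""
    for stage in stages:
        context = stage(context)
    return context

# Two toy stages standing in for real model calls (hypothetical).
def normalize(ctx: Context) -> Context:
    return {**ctx, "query": str(ctx["query"]).strip().lower()}

def answer(ctx: Context) -> Context:
    return {**ctx, "answer": f"You asked about: {ctx['query']}"}

result = run_pipeline([normalize, answer], {"query": "  What Is RAG?  "})
print(result["answer"])  # You asked about: what is rag?
```

Each stage returns a new dict instead of mutating its input, so intermediate results can be logged or inspected between stages.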

Why Single-Stage AI Systems Fall Short

Single-stage systems try to do everything in one go: understand, reason, and respond in a single prompt. While this works for simple queries, it breaks down for complex real-world applications like document analysis, decision support, or multi-step reasoning.

Here’s why:

- 🧩 Lack of specialization: One model cannot perform all tasks optimally.

- ⚙️ High compute cost: Using large models for every query is inefficient.

- ⚠️ Error compounding: Without intermediate validation, a single early mistake carries through to the final output.

- 🔄 No feedback mechanism: The system cannot learn or self-correct dynamically.

This is where multi-stage pipelines transform AI from a reactive tool into an intelligent, adaptive system.

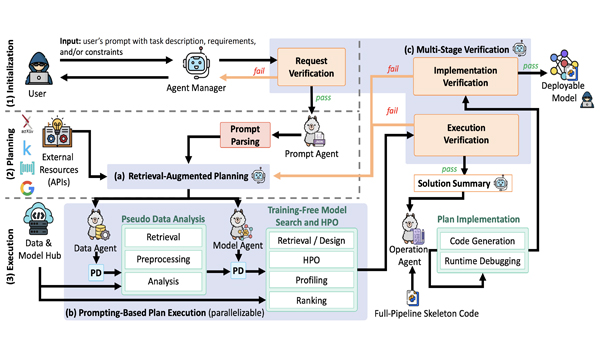

The Architecture of a Multi-Stage LLM Pipeline

A well-designed pipeline operates like an assembly line — each stage has a role and passes refined data to the next.

1. Data Ingestion & Preprocessing

Every AI journey begins with data. This stage cleans, normalizes, and structures data for better context understanding. It filters out noise, irrelevant content, and duplicates — setting a strong foundation.
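Here is a minimal sketch of the cleaning, normalization, and deduplication this stage performs, applied to plain-text chunks. The `min_words` cutoff of 3 is an assumed noise threshold, not a recommended value. Production systems often add near-duplicate detection on top of this exact-match check.

```python
import re
import unicodedata
from typing import List

def preprocess(raw_chunks: List[str], min_words: int = 3) -> List[str]:
    """Clean, normalize, and deduplicate text chunks before retrieval."""
    seen = set()
    cleaned = []
    for chunk in raw_chunks:
        # Normalize Unicode (e.g. full-width characters, ligatures) to NFKC form.
        text = unicodedata.normalize("NFKC", chunk)
        # Collapse runs of whitespace (including newlines) into single spaces.
        text = re.sub(r"\s+", " ", text).strip()
        # Drop fragments too short to carry useful context (assumed noise rule).
        if len(text.split()) < min_words:
            continue
        # Skip exact duplicates, compared case-insensitively.
        key = text.lower()
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned

docs = [
    "Invoice  total:\n  $1,200 due on receipt.",
    "invoice total: $1,200 due on receipt.",
    "Page 3",
    "Payment terms are net 30 days.",
]
print(preprocess(docs))
# ['Invoice total: $1,200 due on receipt.', 'Payment terms are net 30 days.']
```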

2. Context Retrieval & Knowledge Augmentation

Here, an embedding model converts the query into a vector, and a vector database searches for the most similar documents or facts. This gives the LLM the right context before it starts reasoning, which is a key factor in improving factual accuracy.
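To make the retrieval step concrete, here is a minimal sketch that ranks documents by cosine similarity to the query and keeps the top `k`. It uses two hypothetical stand-ins: a toy term-frequency `embed` function replaces a learned embedding model, and an in-memory list replaces a vector database.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

def embed(text: str) -> Dict[str, float]:
    """Toy stand-in for an embedding model: a term-frequency vector."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    return {tok: float(n) for tok, n in counts.items()}

def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors; 0.0 if either is empty."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, corpus: List[str], k: int = 2) -> List[Tuple[float, str]]:
    """Return the k documents most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(doc)), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]

corpus = [
    "Invoices must include a tax ID and a due date.",
    "Our office is closed on public holidays.",
    "A missing due date makes an invoice non-compliant.",
]
for score, doc in retrieve("What must an invoice include?", corpus):
    print(f"{score:.2f}  {doc}")
```

A real vector database would precompute and index the document vectors rather than embedding the whole corpus on every query.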

3. Core Reasoning / Generation

This is where the main LLM operates — analyzing the input and generating a coherent, context-aware response. By feeding the model enriched data from earlier stages, you reduce hallucinations and improve relevance.
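Retrieved passages are commonly fed to the generation model by assembling them into the prompt. The sketch below builds such a grounded prompt. The template wording and the `[n]` citation convention are assumptions chosen for illustration, and the actual model call is left out.

```python
from typing import List

def build_prompt(question: str, passages: List[str]) -> str:
    """Assemble a grounded prompt: numbered context passages, then the question."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the context below. "
        "Cite passage numbers like [1]. If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

prompt = build_prompt(
    "What must an invoice include?",
    ["Invoices must include a tax ID and a due date.",
     "A missing due date makes an invoice non-compliant."],
)
print(prompt)
```

Numbering the passages gives the later validation stage something checkable: it can confirm that every citation in the answer points at a passage that was actually supplied.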

4. Evaluation & Validation

Before the output reaches users, a secondary model (or a human reviewer) validates it. This stage checks for factual correctness, tone, safety, and compliance — ensuring enterprise-grade reliability.
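A secondary LLM is one way to validate output. Cheap deterministic checks can also run before or alongside it. The sketch below is a rule-based validator that checks for an empty answer, missing or invalid `[n]` citations, and phrases on a policy list. The `BANNED_PHRASES` list and the citation format are hypothetical.

```python
import re
from typing import List

BANNED_PHRASES = ["guaranteed returns", "legal advice"]  # hypothetical policy list

def validate(answer: str, num_passages: int) -> List[str]:
    """Return a list of problems; an empty list means the answer passes."""
    issues = []
    if not answer.strip():
        issues.append("empty answer")
    # Collect citation numbers written as [1], [2], ...
    cited = {int(n) for n in re.findall(r"\[(\d+)\]", answer)}
    if not cited:
        issues.append("no citations to retrieved context")
    invalid = sorted(n for n in cited if not 1 <= n <= num_passages)
    if invalid:
        issues.append(f"cites non-existent passages: {invalid}")
    lowered = answer.lower()
    for phrase in BANNED_PHRASES:
        if phrase in lowered:
            issues.append(f"policy violation: '{phrase}'")
    return issues

print(validate("Invoices need a tax ID and a due date [1][2].", num_passages=2))  # []
print(validate("This offers guaranteed returns [3].", num_passages=2))
```

Returning a list of issues, rather than a single pass/fail flag, tells the pipeline why an answer failed. It can then decide whether to regenerate, escalate, or block the answer.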

5. Post-Processing & Optimization

Finally, the response is polished for clarity, formatting, and style. The output might also go through SEO optimization or formatting for end-user delivery (for example, chatbot display, API response, or web article).
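Here is a minimal sketch of channel-specific post-processing. It tidies spacing, then either trims the text for a chat display or wraps it in a JSON payload for an API. The channel names, the 280-character chat limit, and the JSON field names are all hypothetical.

```python
import json
import re

def postprocess(answer: str, channel: str, max_chat_chars: int = 280) -> str:
    """Polish a validated answer and shape it for the delivery channel."""
    text = re.sub(r"[ \t]+", " ", answer).strip()  # tidy stray spacing
    if channel == "chat":
        # Keep chat bubbles short: cut at the last word boundary, then add "...".
        if len(text) > max_chat_chars:
            text = text[:max_chat_chars].rsplit(" ", 1)[0] + "..."
        return text
    if channel == "api":
        return json.dumps({"answer": text, "format": "plain_text"})
    raise ValueError(f"unknown channel: {channel}")

print(postprocess("  A tax ID   and due date are required [1].  ", "api"))
print(postprocess("one two three four", "chat", max_chat_chars=9))
```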

Benefits of Multi-Stage Pipelines

When implemented strategically, multi-stage LLM pipelines deliver measurable advantages:

✅ Improved Accuracy: Layered reasoning ensures that outputs are cross-verified.

⚡ Higher Efficiency: Lightweight models handle simple tasks; heavy models only do complex reasoning.

🔍 Transparency: You can trace where an error occurred in the pipeline.

💰 Cost Optimization: Smart orchestration reduces token usage and compute time.

🧠 Continuous Learning: Feedback loops improve performance over time.
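The efficiency and cost benefits depend on routing each request to the cheapest model that can handle it. The sketch below uses a crude keyword-and-length heuristic to pick a model. `small_model` and `large_model` are hypothetical placeholders for real model clients, and the hint list and 20-word cutoff are assumptions. Many production routers use a trained classifier instead.

```python
def small_model(prompt: str) -> str:  # hypothetical cheap, fast model
    return f"[small] {prompt}"

def large_model(prompt: str) -> str:  # hypothetical expensive reasoning model
    return f"[large] {prompt}"

REASONING_HINTS = ("why", "compare", "explain", "analyze", "step by step")

def is_complex(prompt: str, max_simple_words: int = 20) -> bool:
    """Crude heuristic: long prompts or reasoning keywords go to the large model."""
    lowered = prompt.lower()
    too_long = len(prompt.split()) > max_simple_words
    return too_long or any(hint in lowered for hint in REASONING_HINTS)

def route(prompt: str) -> str:
    """Send the prompt to the cheapest model judged capable of answering it."""
    model = large_model if is_complex(prompt) else small_model
    return model(prompt)

print(route("What is our refund policy?"))
print(route("Compare the two vendor contracts and explain the risks."))
```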

At PMDG Technologies, our AI frameworks use similar architectures to automate customer interactions, optimize workflows, and drive intelligent decision-making across industries.

How Multi-Stage Pipelines Improve Real-World AI

Let’s take an example — automated document understanding for compliance and audit workflows.

- Stage 1: The system extracts text and metadata from uploaded files.

- Stage 2: It classifies the document type and identifies key entities (like invoice numbers or clauses).

- Stage 3: A reasoning model checks if all required compliance elements are present.

- Stage 4: A validator LLM cross-checks anomalies and flags inconsistencies.

- Stage 5: A summarizer generates a human-readable report.
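The five compliance stages can be sketched as plain functions chained in order. This is a toy illustration under explicit assumptions. Extraction receives already-parsed text, and regex rules replace the classifier. A date check stands in for the validator LLM, and a template replaces the summarizer. `REQUIRED_FIELDS` and the field patterns are hypothetical.

```python
import re
from datetime import date
from typing import Any, Dict

Doc = Dict[str, Any]
REQUIRED_FIELDS = {"invoice": ["invoice_number", "tax_id", "due_date"]}  # hypothetical

def extract(doc: Doc) -> Doc:
    # Stage 1: a real system would parse files or run OCR; here the text is given.
    return {**doc, "text": doc["raw"].strip()}

def classify_and_tag(doc: Doc) -> Doc:
    # Stage 2: keyword classifier plus regex entity extraction (toy rules).
    text = doc["text"]
    doc_type = "invoice" if re.search(r"\binvoice\b", text, re.I) else "unknown"
    patterns = {
        "invoice_number": r"Invoice No\.?\s*:?\s*(\S+)",
        "tax_id": r"Tax ID\s*:?\s*(\S+)",
        "issue_date": r"\bIssued\s*:?\s*(\d{4}-\d{2}-\d{2})",
        "due_date": r"\bDue\b\s*:?\s*(\d{4}-\d{2}-\d{2})",
    }
    entities = {}
    for name, pattern in patterns.items():
        match = re.search(pattern, text, re.I)
        if match:
            entities[name] = match.group(1)
    return {**doc, "type": doc_type, "entities": entities}

def check_compliance(doc: Doc) -> Doc:
    # Stage 3: list required fields that were not found.
    required = REQUIRED_FIELDS.get(doc["type"], [])
    return {**doc, "missing": [f for f in required if f not in doc["entities"]]}

def validate_anomalies(doc: Doc) -> Doc:
    # Stage 4: rule-based stand-in for a validator LLM.
    flags = []
    ents = doc["entities"]
    if "issue_date" in ents and "due_date" in ents:
        if date.fromisoformat(ents["due_date"]) < date.fromisoformat(ents["issue_date"]):
            flags.append("due date is before issue date")
    return {**doc, "flags": flags}

def summarize(doc: Doc) -> Doc:
    # Stage 5: template report; a real system might call an LLM here.
    status = "COMPLIANT" if not doc["missing"] and not doc["flags"] else "NEEDS REVIEW"
    lines = [f"Document type: {doc['type']}", f"Status: {status}"]
    lines += [f"Missing field: {f}" for f in doc["missing"]]
    lines += [f"Anomaly: {f}" for f in doc["flags"]]
    return {**doc, "report": "\n".join(lines)}

doc = {"raw": "INVOICE No: INV-042\nIssued: 2024-03-01\nDue: 2024-02-15\nTotal: 1200 EUR"}
for stage in (extract, classify_and_tag, check_compliance, validate_anomalies, summarize):
    doc = stage(doc)
print(doc["report"])
```

Because every stage keeps its inputs in the returned dict, the final record shows which stage detected each problem, which supports the audit trail.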

The result? Faster document processing, higher accuracy, and full audit transparency.

This multi-stage approach is not limited to compliance — it powers AI agents, workflow automation, customer service bots, and decision-support systems.

Designing Smarter Pipelines: Best Practices

At PMDG, our AI architects follow a set of principles to ensure pipelines perform at enterprise scale:

- Start modular: Design each stage as an independent service.

- Leverage prompt chaining: Connect reasoning steps logically, passing context between models.

- Use uncertainty thresholds: Route responses whose confidence falls below a set threshold to a stronger model or a human reviewer.

- Implement monitoring: Track accuracy, latency, and token usage per stage.

- Optimize for feedback: Continuously retrain and refine based on user interactions.
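Two of these practices, uncertainty thresholds and per-stage monitoring, can be sketched together. The sketch runs named stages, records each stage's latency, and diverts low-confidence answers to review. The `draft` stage, its fixed confidence score, and the 0.7 threshold are hypothetical. Real confidence might come from token log-probabilities or a separate scoring model. Accuracy and token tracking are left out for brevity.

```python
import time
from typing import Any, Callable, Dict, List, Tuple

Context = Dict[str, Any]
Stage = Callable[[Context], Context]
CONFIDENCE_THRESHOLD = 0.7  # hypothetical; tune per use case

def run_monitored(stages: List[Tuple[str, Stage]], ctx: Context,
                  metrics: Dict[str, List[float]]) -> Context:
    """Run named stages in order, recording each stage's latency in seconds."""
    for name, stage in stages:
        start = time.perf_counter()
        ctx = stage(ctx)
        metrics.setdefault(name, []).append(time.perf_counter() - start)
    return ctx

def draft(ctx: Context) -> Context:
    # Hypothetical model call that also reports a confidence score in [0, 1].
    return {**ctx, "answer": "Net 30", "confidence": 0.55}

def gate(ctx: Context) -> Context:
    # Forward low-confidence answers for deeper review instead of delivering them.
    needs_review = ctx["confidence"] < CONFIDENCE_THRESHOLD
    return {**ctx, "route": "human_review" if needs_review else "deliver"}

metrics: Dict[str, List[float]] = {}
result = run_monitored([("draft", draft), ("gate", gate)],
                       {"question": "Payment terms?"}, metrics)
print(result["route"])                          # human_review
print({k: len(v) for k, v in metrics.items()})  # {'draft': 1, 'gate': 1}
```

Keeping metrics per stage name, rather than per request, shows directly which stage dominates latency as traffic grows.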

Transitioning from single-stage to multi-stage design improves reliability and reduces the cost-to-serve over time — making it ideal for scalable enterprise AI.

The Future of Multi-Stage AI Systems

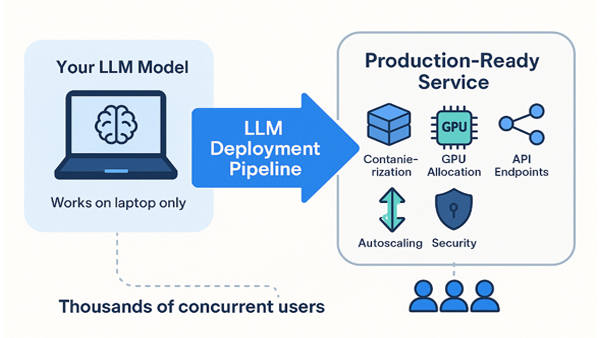

The next evolution of multi-stage pipelines will include autonomous orchestration, where AI agents decide which models or tools to call dynamically. These “self-routing” pipelines will adjust based on task complexity, context, and performance.

Moreover, hybrid pipelines — combining symbolic reasoning (rules, logic) with neural reasoning (LLMs) — will bring the best of both worlds: factual accuracy and creative flexibility.

As AI continues to mature, multi-stage pipelines are likely to become the standard architecture for high-performance systems, from chatbots and recommendation engines to autonomous decision platforms.

Conclusion

Building smarter AI systems requires more than just powerful models — it demands structured intelligence.

By embracing multi-stage LLM pipelines, organizations can achieve scalable, explainable, and human-aligned AI.

At PMDG Technologies, we specialize in designing and deploying these intelligent pipelines, combining advanced model orchestration, real-time validation, and human-in-the-loop workflows to deliver consistent, business-ready results.

Ready to build your smarter AI ecosystem?

👉 Visit www.pmdgtech.com to learn how PMDG Technologies can help transform your AI vision into action.
